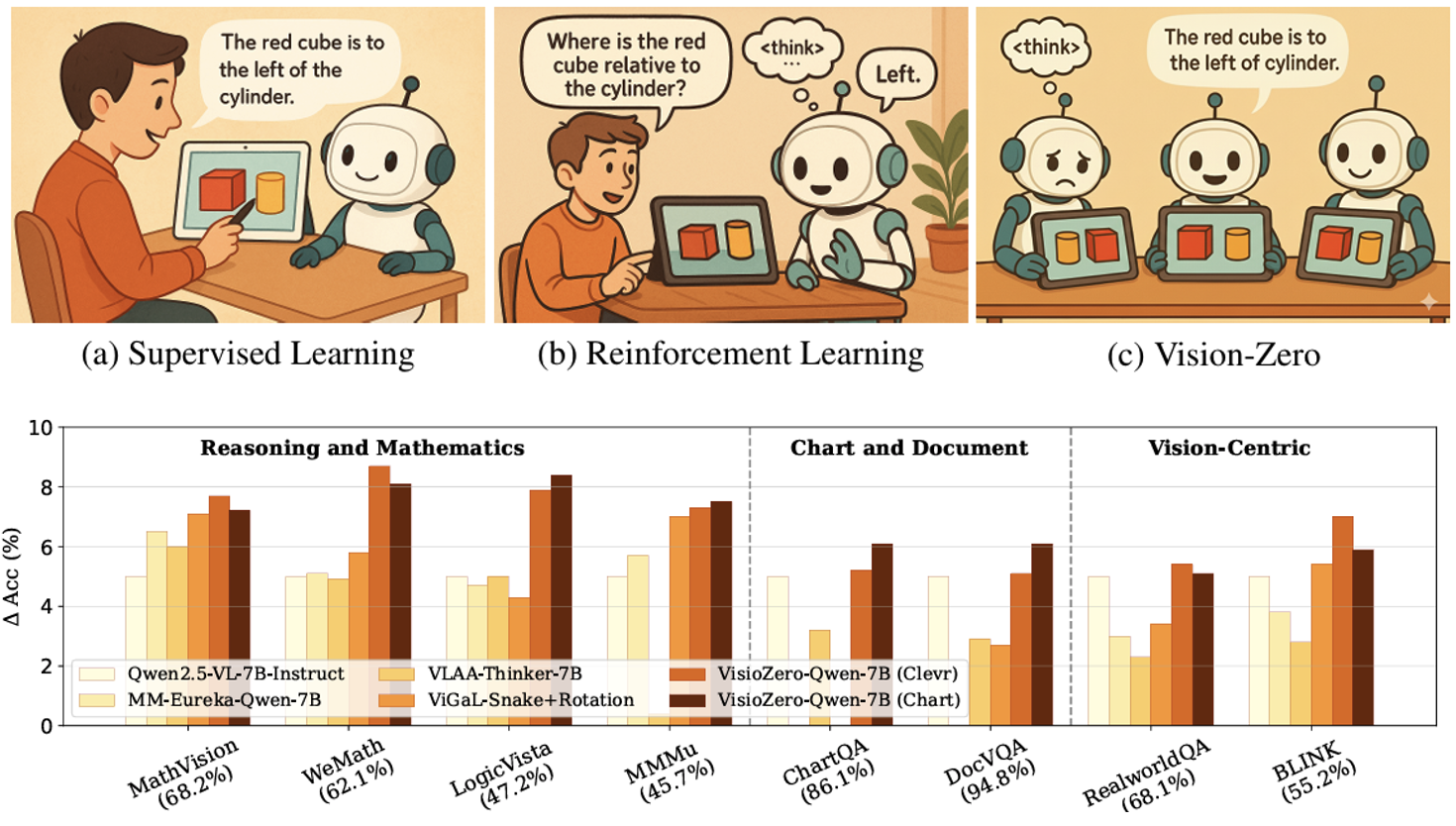

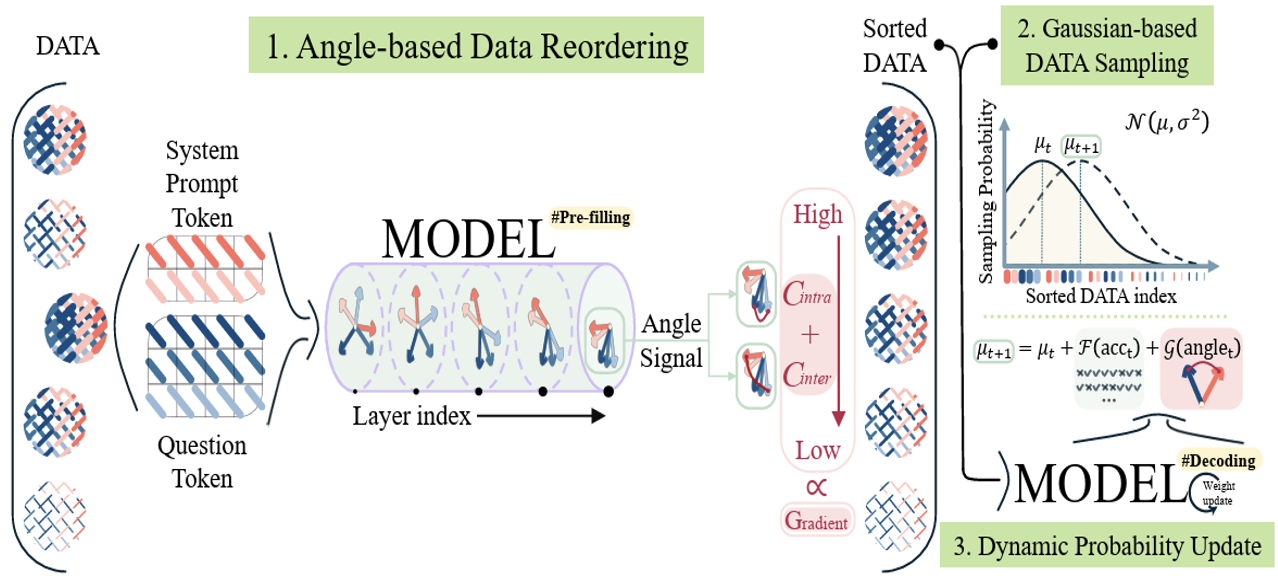

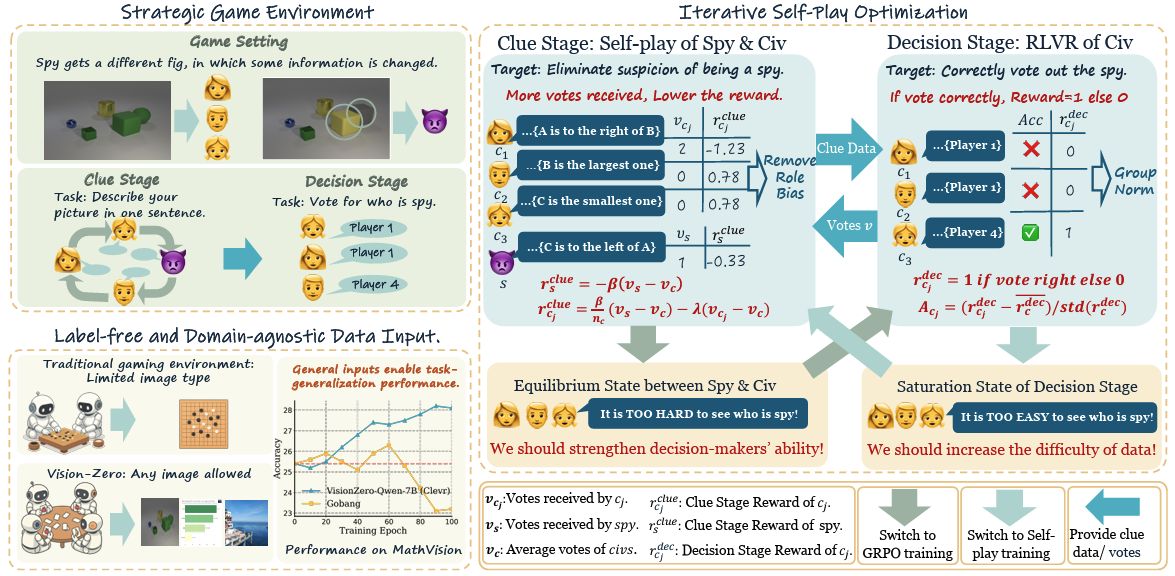

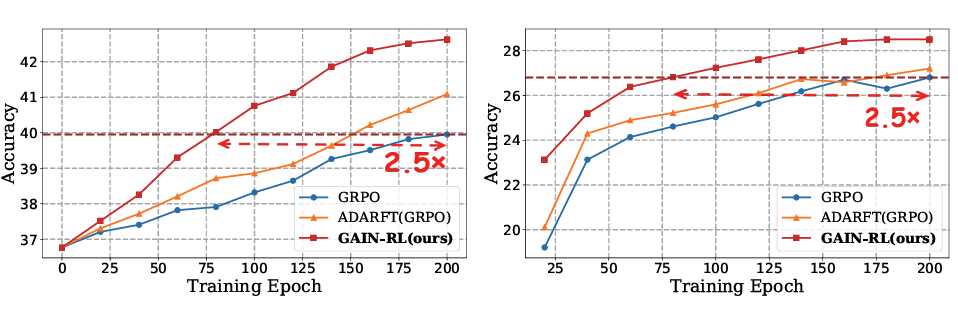

[ Apr 2025 ] : Our paper Angles Don't Lie: Unlocking Training-Efficient RL Through the Model's Own Signals has been accepted by NeurIPS 2025 (Spotlight)! Angles Don't Lie lets your model actively select the data it truly needs, rather than passively consuming what it's fed, accelerating training by 2 to 2.5 times! Code is released on the project page.

[ May 2025 ] : I’ll be joining Adobe in San Jose as a summer research intern in 2025! Always open to coffee chats ☕😊 — feel free to reach out!

[ Apr 2025 ] : A little personal milestone: I've had first-author papers accepted at all three major AI conferences (ICML, ICLR, and NeurIPS)! Cheers 🎉!

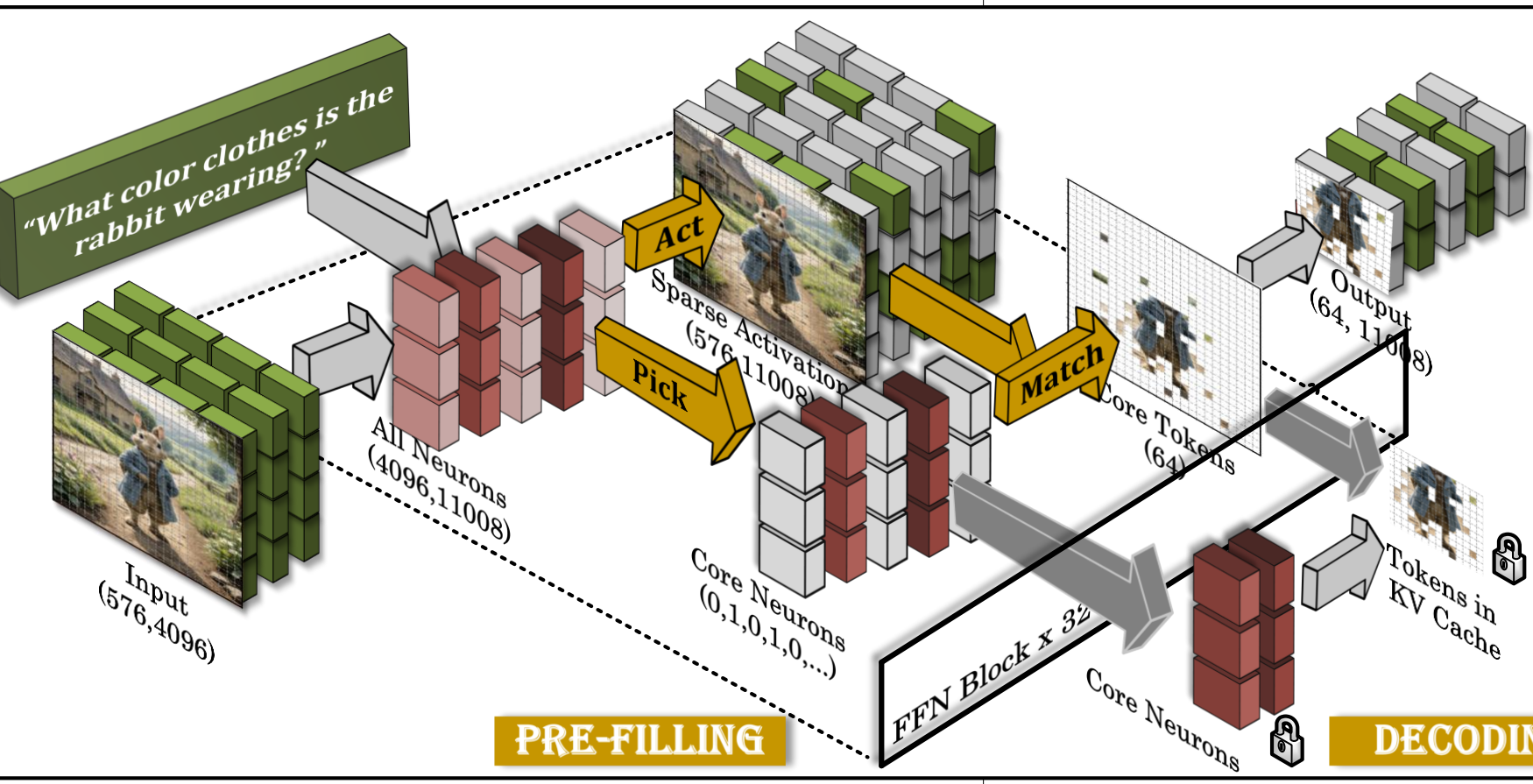

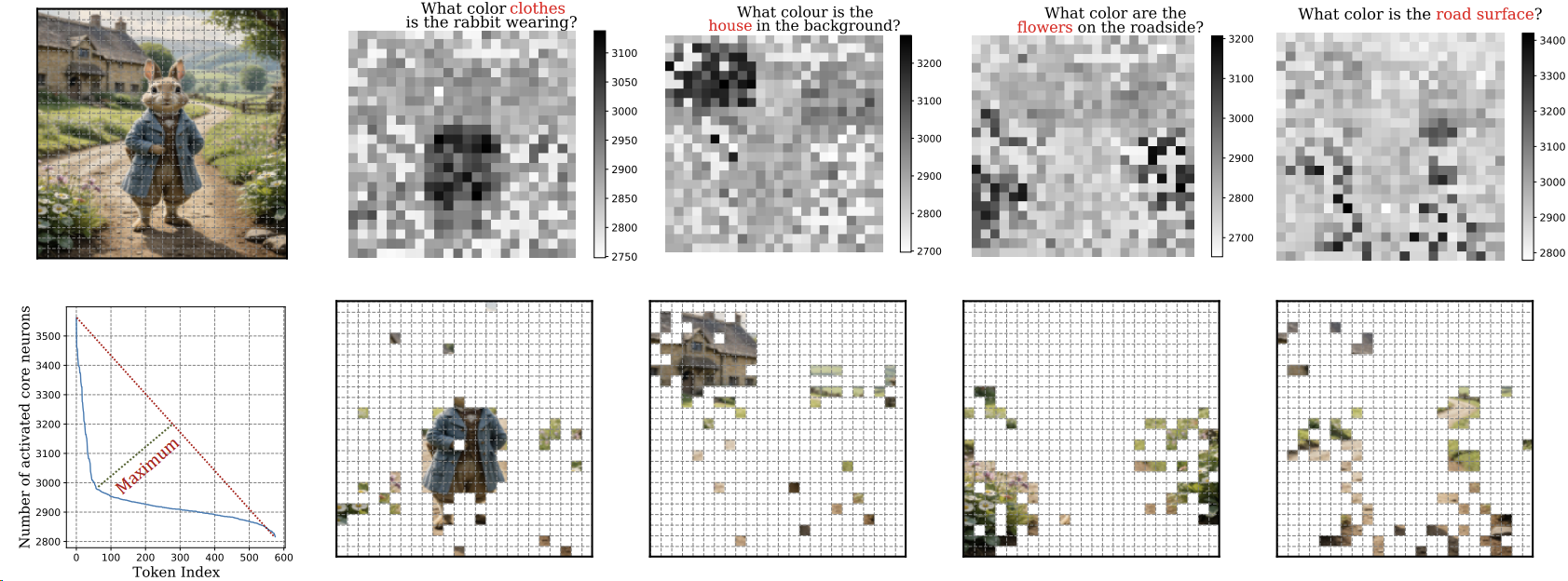

[ Apr 2025 ] : Our paper CoreMatching: A Co-adaptive Sparse Inference Framework with Token and Neuron Pruning for Comprehensive Acceleration of Vision-Language Models has been accepted by ICML 2025. CoreMatching combines the two sparsity modes in VLMs, token sparsity and neuron sparsity, and theoretically explains why cosine similarity is a better metric than attention score. Code is released on the project page.

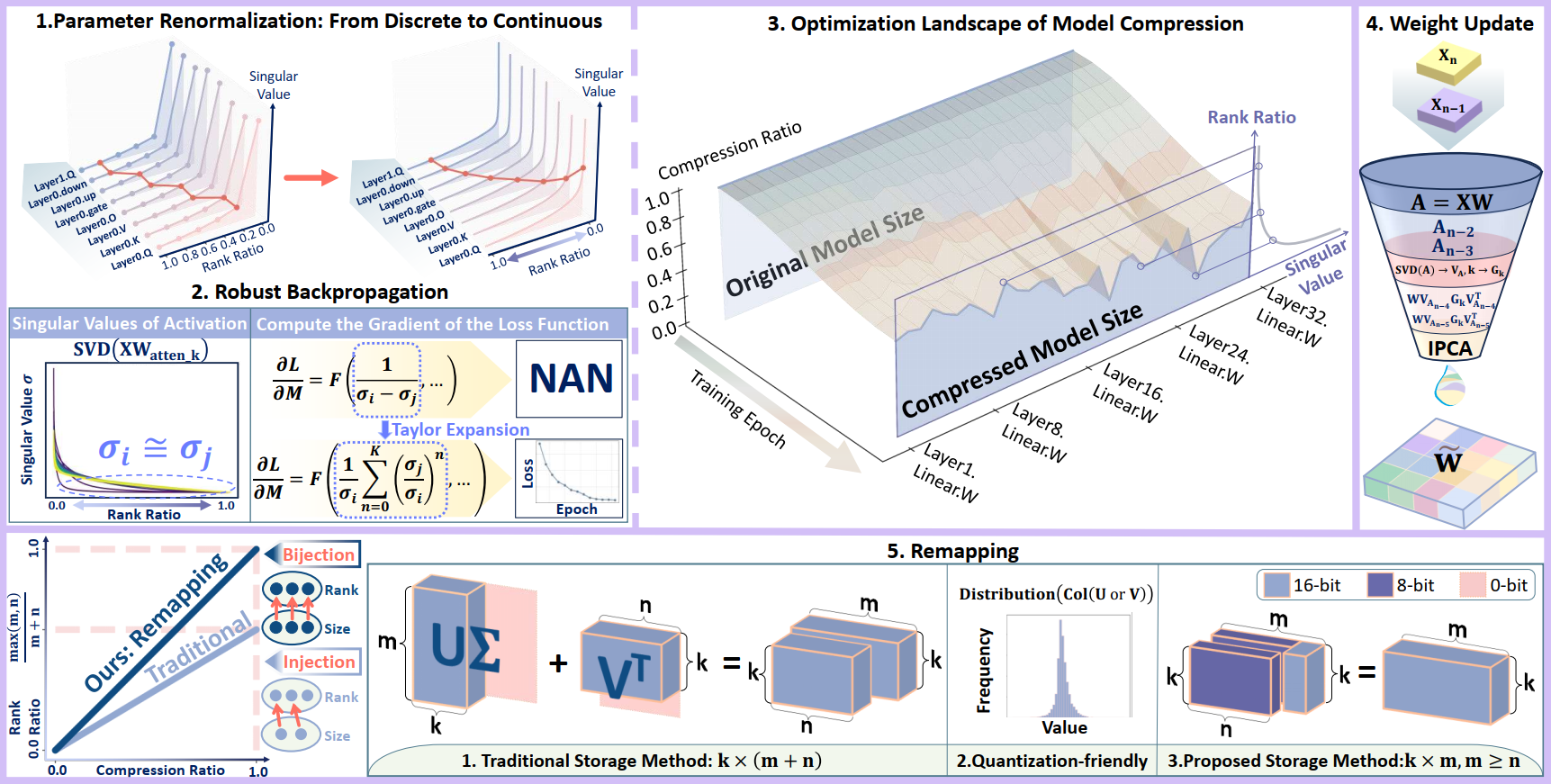

[ Feb 2025 ] : Our paper Dobi-SVD: Differentiable SVD for LLM Compression and Some New Perspectives has been accepted by ICLR 2025. Dobi-SVD is a novel LLM compression solution for low-cost computing devices! Visit the project page for more information.

[ Oct 2024 ] : Our paper CoreInfer: Accelerating Large Language Model Inference with Semantics-Inspired Adaptive Sparse Activation has been uploaded to arXiv. CoreInfer achieves a 10.33x speedup on an NVIDIA Titan XP without sacrificing performance! Visit the project page for more information.

[ Mar 2024 ] : I am excited to announce that I will join the Department of Electrical and Computer Engineering at Duke University as a PhD student in Fall 2024! Looking forward to my PhD life!

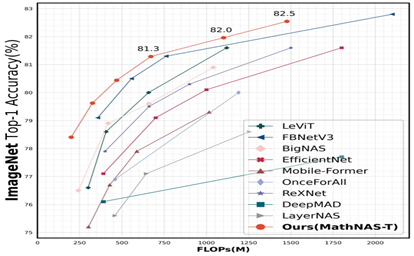

[ Sep 2023 ] : Our paper MathNAS: If Blocks Have a Role in Mathematical Architecture Design has been accepted by NeurIPS 2023. MathNAS achieves 82.5% top-1 accuracy on ImageNet-1k! See the project page for more information.

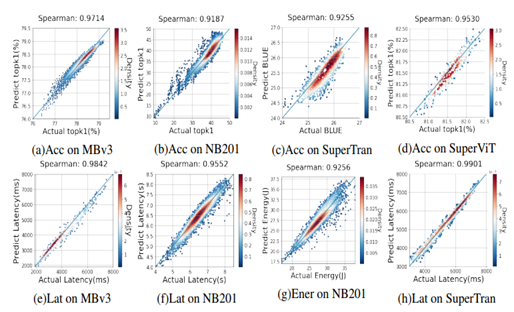

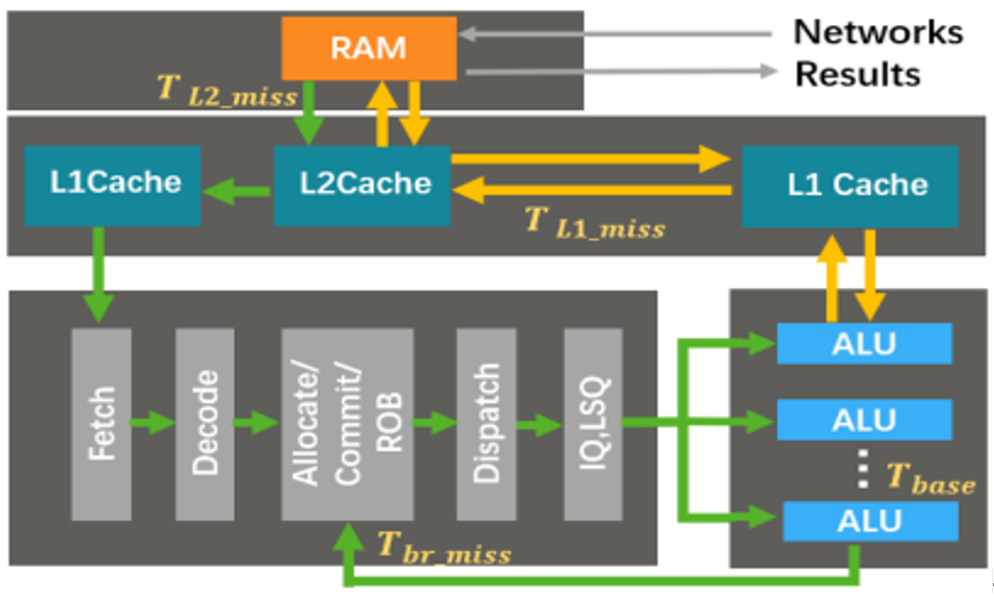

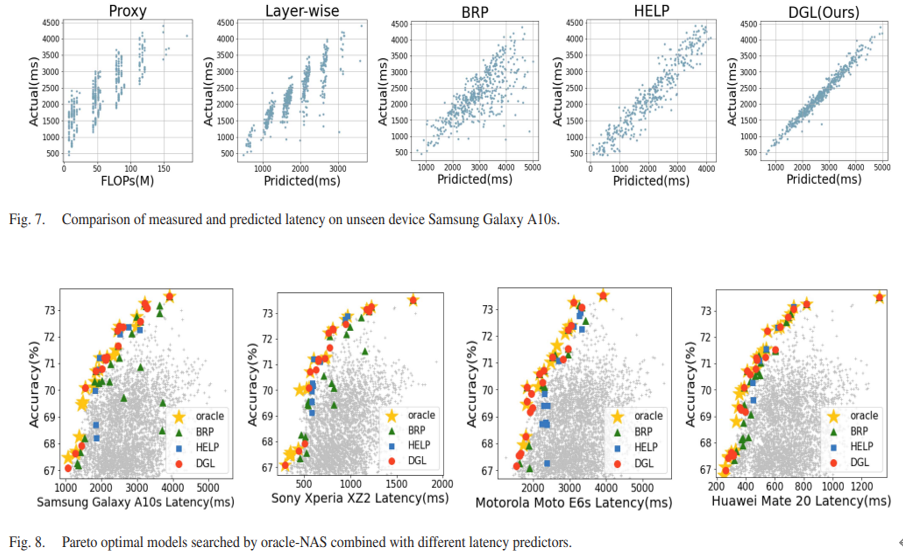

[ Apr 2023 ] : Our paper DGL: Device Generic Latency model for Neural Architecture Search has been accepted by IEEE Transactions on Mobile Computing (CCF A). DGL is dedicated to accelerating the deployment of NAS on mobile devices and has been evaluated on 50+ different mobile phones! See the project code for more information.

[ Jul 2022 ] : I received my B.S. from Huazhong University of Science and Technology (HUST) and was named an Outstanding Graduate!

[ Sep 2021 ] : I received the China National Scholarship (0.2%)! This is the highest award given by the Ministry of Education of China to undergraduates.